publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2024

-

As generative models improve, people adapt their prompts. Eaman Jahani, Benjamin Manning, Joe Zhang, Hong Yi Tu Ye, Mohammed Alsobay, and 3 more authors. arXiv [cs.HC], 2024.

In an online experiment with N = 1893 participants, we collected and analyzed over 18,000 prompts and over 300,000 images to explore how the importance of prompting will change as the capabilities of generative AI models continue to improve. Each participant in our experiment was randomly and blindly assigned to use one of three text-to-image diffusion models: DALL-E 2, its more advanced successor DALL-E 3, or a version of DALL-E 3 with automatic prompt revision. Participants were then asked to write prompts to reproduce a target image as closely as possible in 10 consecutive tries. We find that task performance was higher for participants using DALL-E 3 than for those using DALL-E 2. This performance gap corresponds to a noticeable difference in the similarity of participants’ images to their target images, and was caused in equal measure by: (1) the increased technical capabilities of DALL-E 3, and (2) endogenous changes in participants’ prompting in response to these increased capabilities. More specifically, despite being blind to the model they were assigned, participants assigned to DALL-E 3 wrote longer prompts that were more semantically similar to each other and contained a greater number of descriptive words. Furthermore, while participants assigned to DALL-E 3 with prompt revision still outperformed those assigned to DALL-E 2, automatic prompt revision reduced the benefits of using DALL-E 3 by 58%. Taken together, our results suggest that as models continue to progress, people will continue to adapt their prompts to take advantage of new models’ capabilities.

-

The RealHumanEval: Evaluating Large Language Models’ Abilities to Support Programmers. Hussein Mozannar, Valerie Chen, Mohammed Alsobay, and 7 more authors. arXiv, Apr 2024.

Evaluation of large language models (LLMs) for code has primarily relied on static benchmarks, including HumanEval (Chen et al., 2021), which measure the ability of LLMs to generate complete code that passes unit tests. As LLMs are increasingly used as programmer assistants, we study whether gains on existing benchmarks translate to gains in programmer productivity when coding with LLMs, including time spent coding. In addition to static benchmarks, we investigate the utility of preference metrics that might be used as proxies to measure LLM helpfulness, such as code acceptance or copy rates. To do so, we introduce RealHumanEval, a web interface to measure the ability of LLMs to assist programmers, through either autocomplete or chat support. We conducted a user study (N=213) using RealHumanEval in which users interacted with six LLMs of varying base model performance. Despite static benchmarks not incorporating humans-in-the-loop, we find that improvements in benchmark performance lead to increased programmer productivity; however gaps in benchmark versus human performance are not proportional – a trend that holds across both forms of LLM support. In contrast, we find that programmer preferences do not correlate with their actual performance, motivating the need for better, human-centric proxy signals. We also open-source RealHumanEval to enable human-centric evaluation of new models and the study data to facilitate efforts to improve code models.

2023

-

The Effects of Group Composition and Dynamics on Collective Performance. Abdullah Almaatouq, Mohammed Alsobay, Ming Yin, Duncan J Watts. Top. Cogn. Sci., Nov 2023.

As organizations gravitate to group-based structures, the problem of improving performance through judicious selection of group members has preoccupied scientists and managers alike. However, which individual attributes best predict group performance remains poorly understood. Here, we describe a preregistered experiment in which we simultaneously manipulated four widely studied attributes of group compositions: skill level, skill diversity, social perceptiveness, and cognitive style diversity. We find that while the average skill level of group members, skill diversity, and social perceptiveness are significant predictors of group performance, skill level dominates all other factors combined. Additionally, we explore the relationship between patterns of collaborative behavior and performance outcomes and find that any potential gains in solution quality from additional communication between the group members are outweighed by the overhead time cost, leading to lower overall efficiency. However, groups exhibiting more “turn-taking” behavior are considerably faster and thus more efficient. Finally, contrary to our expectation, we find that group compositional factors (i.e., skill level and social perceptiveness) are not associated with the amount of communication between group members nor turn-taking dynamics.

2021

-

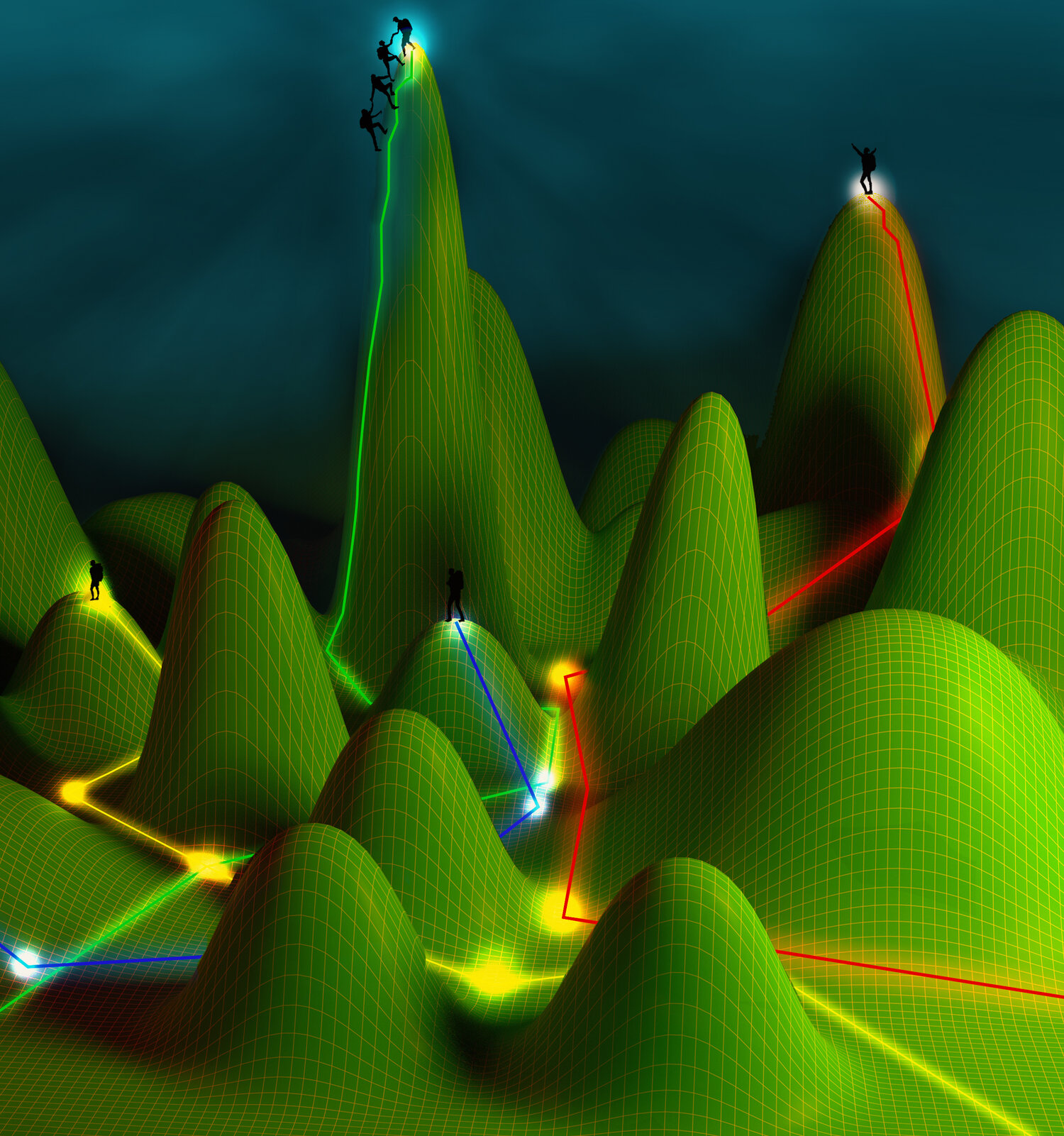

Task complexity moderates group synergy. Abdullah Almaatouq, Mohammed Alsobay, Ming Yin, Duncan J Watts. Proc. Natl. Acad. Sci. U. S. A., Sep 2021.

Complexity, defined in terms of the number of components and the nature of the interdependencies between them, is clearly a relevant feature of all tasks that groups perform. Yet the role that task complexity plays in determining group performance remains poorly understood, in part because no clear language exists to express complexity in a way that allows for straightforward comparisons across tasks. Here we avoid this analytical difficulty by identifying a class of tasks for which complexity can be varied systematically while keeping all other elements of the task unchanged. We then test the effects of task complexity in a preregistered two-phase experiment in which 1,200 individuals were evaluated on a series of tasks of varying complexity (phase 1) and then randomly assigned to solve similar tasks either in interacting groups or as independent individuals (phase 2). We find that interacting groups are as fast as the fastest individual and more efficient than the most efficient individual for complex tasks but not for simpler ones. Leveraging our highly granular digital data, we define and precisely measure group process losses and synergistic gains and show that the balance between the two switches signs at intermediate values of task complexity. Finally, we find that interacting groups generate more solutions more rapidly and explore the solution space more broadly than independent problem solvers, finding higher-quality solutions than all but the highest-scoring individuals.